Helppier’s software architecture performs well over high-speed connections and covers common web use cases, but is it enough for our Mobile SDK?

We are happy to present another article in our Helppier Mobile development series. Today we are excited to share how we adapted our software architecture to ensure the best performance for the Helppier SDK.

Currently, Helppier’s architecture works for common web use cases because our systems are usually accessed over fast, wired corporate networks. In the mobile world, however, there are more types of connection, and some of them are slower and have higher latency to our servers. End users also access the applications from many types of devices with very different capabilities.

How can we provide the best architecture for our end users?

For admin users, our current software architecture is enough, but we needed to test a new architecture to cope with these new requirements for end users.

We send two different types of content to the end user:

- Static: the main JavaScript and CSS files of the web application.

- Dynamic: content created by the admin user, plus several optional chunks of JavaScript that are served only when needed (we use dynamic imports in our code to reduce the amount of data sent to the user).

In the current architecture, the static files are served from the Cloudflare CDN (which has more than 200 locations around the world) and perform decently. The dynamic content, however, is served from our main servers in Europe, and our preliminary tests showed that this is the main bottleneck: every piece of content served (an image, a file, text, a dynamic chunk of JavaScript) requires a round trip between the user’s device and our servers, so the more content there is, the lower the speed and performance.
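A rough model makes the round-trip argument concrete: the latency cost grows with the number of separate requests and with the distance to the server. The sketch below is a simplification under stated assumptions; `roundTripCostMs`, the request count, the concurrency and the round-trip times are hypothetical, not Helppier measurements.

```typescript
// Latency-only model: with at most `parallel` requests in flight, every
// "wave" of requests costs one full round trip, however small the responses are.
// Ignores bandwidth, DNS/TCP/TLS setup and protocol details (e.g. HTTP/2 multiplexing).
function roundTripCostMs(requests: number, parallel: number, rttMs: number): number {
  if (requests <= 0) return 0;
  const lanes = Math.max(1, Math.floor(parallel)); // at least one request at a time
  return Math.ceil(requests / lanes) * rttMs;
}

// Hypothetical numbers: 20 pieces of admin content, 6 requests in flight at once.
const farOrigin = roundTripCostMs(20, 6, 250); // distant origin over a high-latency mobile link
const nearEdge = roundTripCostMs(20, 6, 30);   // nearby edge location
console.log(farOrigin, nearEdge); // 1000 120
```

Under these assumptions, moving the same 20 requests closer to the user cuts the latency cost by the ratio of the round-trip times, while adding more pieces of content adds more waves.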

Some strategies

The first strategy we tried was to change the way we deliver our code, dropping dynamic imports so that we could cache all the JavaScript code. We tested this option from different locations around the world, and it improved performance only slightly, because all the other content created by the admin user still had the latency problem. It also had a side effect: the code bundles were larger, which increased the time it took for the device to download them.
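The bundle-size side effect can be illustrated with a small cost model that compares one up-front bundle against a core bundle plus on-demand chunks. This is a sketch under hypothetical assumptions: the link profile, the bundle sizes and the function names are illustrative, and each request is modelled as one round trip plus transfer time.

```typescript
interface LinkProfile {
  rttMs: number;         // round-trip time to the server
  bandwidthKbps: number; // effective download throughput, kilobits per second
}

// One request: a round trip plus transfer time (KB -> kilobits -> seconds -> ms).
function fetchMs(sizeKB: number, link: LinkProfile): number {
  return link.rttMs + ((sizeKB * 8) / link.bandwidthKbps) * 1000;
}

// Strategy A: no dynamic imports; every chunk is part of one up-front bundle.
function bundledMs(coreKB: number, allChunksKB: number[], link: LinkProfile): number {
  const totalKB = allChunksKB.reduce((sum, kb) => sum + kb, coreKB);
  return fetchMs(totalKB, link);
}

// Strategy B: core bundle first, then only the chunks this session needs,
// each fetched on demand (one extra round trip per chunk).
function splitMs(coreKB: number, neededChunksKB: number[], link: LinkProfile): number {
  return neededChunksKB.reduce((sum, kb) => sum + fetchMs(kb, link), fetchMs(coreKB, link));
}

// Hypothetical slow mobile link and bundle sizes.
const slowLink: LinkProfile = { rttMs: 200, bandwidthKbps: 800 };
console.log(bundledMs(200, [100, 100, 100, 100], slowLink)); // 6200
console.log(splitMs(200, [100], slowLink));                  // 3400
```

On a first visit, the single bundle costs more when a session needs only a few of the optional chunks. Its advantage is that it can be cached and reused on later visits, which this sketch does not model.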

The second strategy was to see whether we could cache the dynamic content, but even when pushed to its limits, the server architecture and technology we were using did not allow it.

The next strategy was to change our architecture and adopt newer techniques, Server-Side Rendering (SSR) and Static Site Generation (SSG), to help us cache all the content and reduce our reliance on the user’s hardware.

When it comes to mobile applications, speed and reliability are key, so when developing the SDK we had to make a choice:

- In terms of raw performance, it would be better to develop an SDK that embeds preloaded data during the build process (Static Site Generation) and displays it later, based on what the admin user had configured at build time.

- In terms of high availability and adaptability, an SDK that makes a request on load to fetch the most up-to-date content would be a better fit.
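The two options can be contrasted in a small sketch: a snapshot captured at build time versus a request made when the SDK loads. Combining them, with the build-time snapshot as a fallback, is shown here only as one possible illustration, not as what the Helppier SDK does; `SdkContent`, `BUILD_SNAPSHOT`, `loadContent` and the version field are hypothetical.

```typescript
// Hypothetical shape of the content an admin user publishes.
interface SdkContent {
  version: number; // assumed to increase on every publish
  guides: string[];
}

// SSG-style: a snapshot captured at build time and shipped inside the app.
// Fast (no network needed) but only as fresh as the last build.
const BUILD_SNAPSHOT: SdkContent = { version: 3, guides: ["onboarding"] };

// Fetch-on-load style: ask the server for the latest content every time the
// SDK starts. Here the snapshot is used as a fallback when the request fails
// or returns something older than what was bundled.
async function loadContent(fetchLatest: () => Promise<SdkContent>): Promise<SdkContent> {
  try {
    const latest = await fetchLatest();
    return latest.version >= BUILD_SNAPSHOT.version ? latest : BUILD_SNAPSHOT;
  } catch {
    return BUILD_SNAPSHOT;
  }
}

// Usage with stubbed network calls (no real endpoint is assumed).
loadContent(() => Promise.resolve({ version: 4, guides: ["onboarding", "checkout"] }))
  .then((content) => console.log(content.version)); // 4
loadContent(() => Promise.reject(new Error("offline")))
  .then((content) => console.log(content.version)); // 3
```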

The solution

To meet these requirements, we implemented the backend as a distributed system based on Cloudflare Workers, which run close to users worldwide, keeping latency low while providing high availability and redundancy.
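In this design the edge answers one question per request: which experience to show, on which screen and after how long (steps 1 and 2 of the flow described later in this section). A minimal sketch of that lookup follows; `ExperienceRule`, `resolveExperience` and the example URLs are hypothetical, and the function deliberately avoids Workers-specific APIs so it can run anywhere. In a Worker, a `fetch` handler would read the screen from the request, call the lookup, and return the result as JSON.

```typescript
// Hypothetical shape of one experience as configured by an admin user.
interface ExperienceRule {
  id: string;
  screen: string;  // app screen on which the experience may appear
  delayMs: number; // how long the SDK should wait before displaying it
  url: string;     // page the SDK should open in a WebView
  enabled: boolean;
}

// What the edge answers to the device: which page to open and under which conditions.
interface DisplayInstruction {
  experienceId: string;
  url: string;
  delayMs: number;
}

// Pure lookup a Worker's fetch handler could call for each request.
// Returns the first enabled rule for the screen, or null if none applies.
function resolveExperience(rules: readonly ExperienceRule[], screen: string): DisplayInstruction | null {
  const rule = rules.find((r) => r.enabled && r.screen === screen);
  if (rule === undefined) return null;
  return { experienceId: rule.id, url: rule.url, delayMs: rule.delayMs };
}

// Example data (hypothetical IDs and URLs).
const rules: ExperienceRule[] = [
  { id: "onboarding", screen: "home", delayMs: 1500, url: "https://guides.example.com/onboarding", enabled: true },
  { id: "old-tour", screen: "settings", delayMs: 0, url: "https://guides.example.com/old-tour", enabled: false },
];

console.log(resolveExperience(rules, "home"));     // onboarding instruction, shown after 1.5 s
console.log(resolveExperience(rules, "settings")); // null: the only rule for this screen is disabled
```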

For the front end that provides the views to the user, we followed a similar strategy, using Server-Side Rendering for each guide: each webpage is prerendered on a separate distributed system. Because the page arrives prerendered, the device has less processing to do in order to display the bubble, which reduces battery consumption and dependence on device specifications, and improves overall speed.
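The core idea of Server-Side Rendering is that the server turns a guide’s data into finished HTML, so the WebView can display it without first downloading and running a JavaScript bundle. Next.js does this by rendering React components on the server; the sketch below uses plain template strings instead, to show only the idea. The `Guide` shape and `renderGuideHtml` are hypothetical. Admin-authored text is escaped because it ends up inside markup.

```typescript
interface GuideStep { title: string; body: string; }
interface Guide { id: string; steps: GuideStep[]; }

// Escape characters that are significant in HTML so admin-authored text cannot inject markup.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Produce the complete document on the server; the client only has to paint it.
function renderGuideHtml(guide: Guide): string {
  const steps = guide.steps
    .map((s) => `<section><h2>${escapeHtml(s.title)}</h2><p>${escapeHtml(s.body)}</p></section>`)
    .join("");
  return (
    `<!DOCTYPE html><html><head><meta charset="utf-8">` +
    `<meta name="viewport" content="width=device-width, initial-scale=1"></head>` +
    `<body data-guide="${escapeHtml(guide.id)}">${steps}</body></html>`
  );
}

// Usage with a hypothetical one-step guide.
console.log(renderGuideHtml({ id: "welcome", steps: [{ title: "Welcome", body: "Tap <b>Next</b> to continue" }] }));
```

Because the rendered string depends only on the guide data, it can be cached and served again until the admin user changes the guide.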

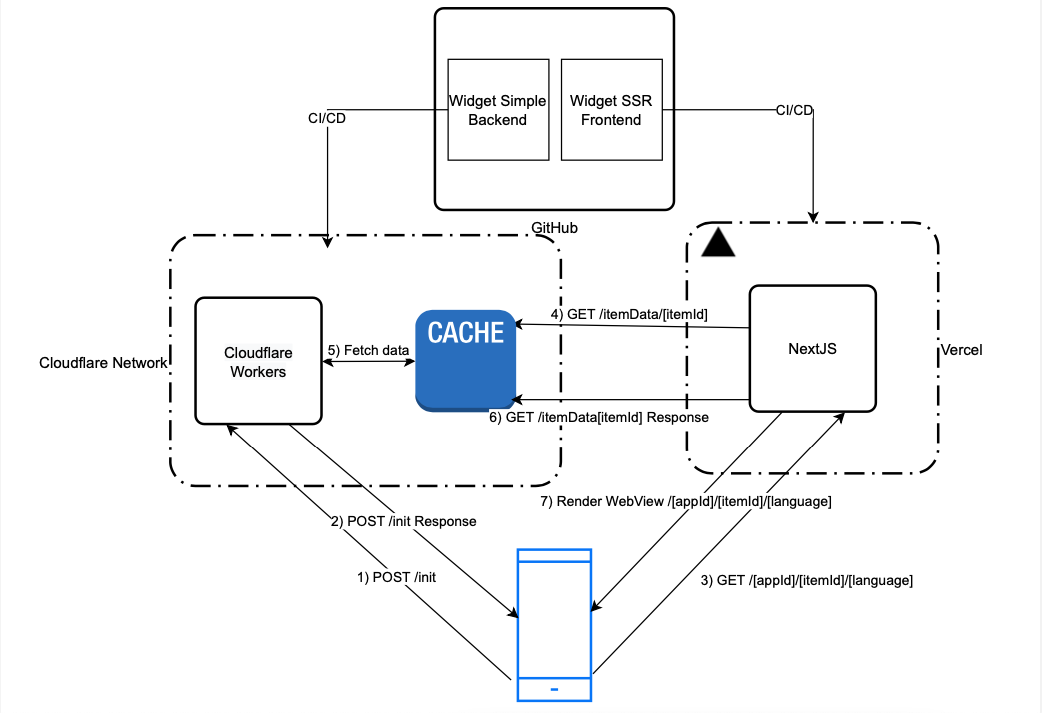

1) The mobile device sends an HTTP request to the nearest Cloudflare Worker, asking which experience should be displayed and under which conditions (on which screen, how long Helppier should wait before displaying it, among other options).

2) The request from step #1 is answered with the URL that should be displayed in the WebView and the conditions for displaying it.

3) The mobile device creates a WebView for the retrieved URL. Vercel’s DNS/load balancer assigns the closest server to handle the request.

4) Vercel’s Next.js server instance checks whether it already has the page prerendered. If it doesn’t, it requests the most up-to-date data through Cloudflare DNS. Otherwise, it skips to step #7.

5) Cloudflare’s nearest cache node checks whether it has a valid response for the request from step #4. If it doesn’t, it requests the data from the closest Worker instance and caches it.

6) Cloudflare answers Vercel’s request (step #4) with the verified data, and Vercel renders the page on the server and caches it.

7) The HTML prerendered on Vercel’s server is now served to the WebView. This reduces how much Helppier relies on the device’s speed, because the markup already exists and does not wait for JavaScript to parse and render the page on the client side.
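Steps 4 to 6 describe two read-through caches in series: Vercel keeps rendered pages, and Cloudflare’s cache node keeps the data it obtained from a Worker. The sketch below models that flow in memory, assuming time-based expiry (a TTL) for both caches and synchronous stand-ins for the network calls. `TtlCache`, `createPageServer`, the TTL values and the origin function are hypothetical; in production both layers are provided by Vercel and Cloudflare rather than hand-written like this.

```typescript
// Minimal in-memory cache with a time-to-live, standing in for both
// Vercel's page cache and Cloudflare's edge cache in this sketch.
class TtlCache<V> {
  private readonly entries = new Map<string, { value: V; expiresAt: number }>();
  private readonly ttlMs: number;
  private readonly now: () => number;

  constructor(ttlMs: number, now: () => number = Date.now) {
    this.ttlMs = ttlMs;
    this.now = now;
  }

  get(key: string): V | undefined {
    const entry = this.entries.get(key);
    if (entry === undefined || entry.expiresAt <= this.now()) return undefined;
    return entry.value;
  }

  set(key: string, value: V): void {
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }
}

interface GuideData { id: string; title: string; }

// Hypothetical stand-in for the Worker that returns the admin's latest content.
type OriginLookup = (id: string) => GuideData;

function createPageServer(origin: OriginLookup, edgeTtlMs: number, pageTtlMs: number, now: () => number = Date.now) {
  const edgeCache = new TtlCache<GuideData>(edgeTtlMs, now); // Cloudflare cache node
  const pageCache = new TtlCache<string>(pageTtlMs, now);    // Vercel rendered pages

  // Step 5: the edge answers from cache if it can; otherwise it asks the Worker and caches the result.
  function getData(id: string): GuideData {
    const cached = edgeCache.get(id);
    if (cached !== undefined) return cached;
    const fresh = origin(id);
    edgeCache.set(id, fresh);
    return fresh;
  }

  // Steps 4, 6 and 7: serve a cached page, or fetch data, render, cache, then serve.
  return function servePage(id: string): string {
    const page = pageCache.get(id);
    if (page !== undefined) return page;
    const data = getData(id);
    // Real code must HTML-escape admin-authored text before interpolating it.
    const html = `<html><body><h1>${data.title}</h1></body></html>`;
    pageCache.set(id, html);
    return html;
  };
}

// Usage with a stub origin that counts how often the Worker is reached.
let originCalls = 0;
const servePage = createPageServer((id) => { originCalls += 1; return { id, title: `Guide ${id}` }; }, 60_000, 10_000);
servePage("welcome");
servePage("welcome");
console.log(originCalls); // 1
```

With a page TTL shorter than the edge TTL, an expired page is re-rendered from edge-cached data without reaching the Worker; only when both entries have expired does a request go all the way to the origin.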

What’s next?

We are happy to keep sharing articles about Helppier Mobile development. Please stay tuned so you don’t miss any part of this process. If you haven’t seen our previous articles, check the beginning of this process in our article Helppier for Mobile: The pursue for the best mobile SDK.